Bethany Wittman’s Updates

Update #3: Concerns and Criticisms with AI in Education

Much is already understood about the impact feedback has on student learning. With the development and increasingly widespread use of technology, specifically AI, applying it to education appears to offer a variety of benefits, such as more time for teachers, more specific and targeted feedback, and more learning opportunities for students. There are, however, criticisms of and concerns about using this technology in our classrooms, including exaggerated claims about AI's capabilities, safety concerns in the form of data breaches, and bias. This update explores those criticisms and concerns.

A significant criticism of the use of artificial intelligence (AI) in education is the misunderstanding of its capabilities, along with the speculative, profit-driven hype that encourages schools to purchase AI products hastily. Selwyn (2022) argues that the future of AI remains “uncertain, unpredictable and essentially unknowable.” He notes that there are numerous “profit motivated reasons” for educational institutions to adopt AI, yet AI's capabilities have often been exaggerated through “intense hyperbole.” He argues that AI products are “bounded mathematical systems” that cannot quantify every important aspect of education, and advises that “education audiences are best advised to be circumspect (if not suspicious) of the hype that surrounds the development of emerging AI technologies.” While Selwyn acknowledges that humans also make “biased, illogical and outright bad decisions,” he maintains that “being no worse than a human does not justify the adoption of flawed AI technology in an educational setting.” It follows that technology should assist human educators rather than replace them.

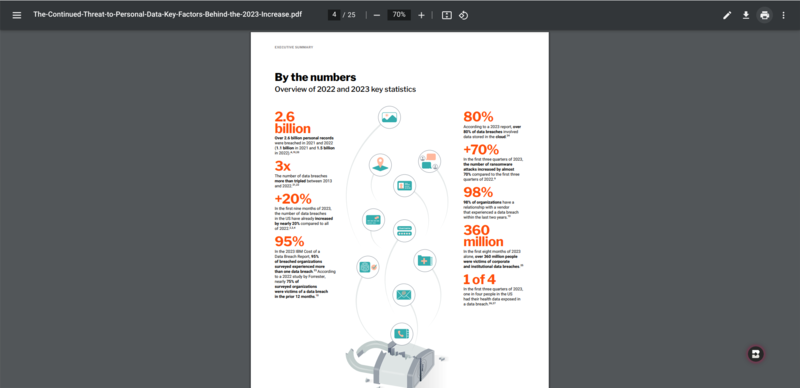

Using AI in education also raises safety concerns. For AI to generate responses, students must interact with it by entering some form of information, especially when they ask it for more personalized responses. Haidar (2024) argues that “the requirement for data input to generate personalized responses raises considerable privacy concerns, as the potential for personal information disclosure exists.” Data breaches are common in today’s highly technological world. According to the Harvard Business Review, “data breaches — in which hackers steal personal data — continue to increase year-on-year: there was a 20% increase in data breaches from 2022 to 2023.” This risk applies to most of us who use the internet or do business with companies that use these types of software products. Haidar adds that “The fear of inaccurate information, data privacy breaches, and the diminishment of crucial personal interactions in the ChatGPT and Generative AI in Educational Ecosystems learning environment that foster rapport and in-depth understanding are prominent among these concerns.” Being aware of these safety concerns is one step, but when working with minors in schools, protecting their safety, including the safety of their data, is of the utmost importance. Some states have enacted laws or policies to help protect student information. Illinois, for example, has enacted the Student Online Personal Protection Act.
According to the Illinois General Assembly, “This Act is intended to ensure that student data will be protected when it is collected by educational technology companies and that the data may be used for beneficial purposes such as providing personalized learning and innovative educational technologies.” The United States also has federal laws that pertain to student data privacy, including the Family Educational Rights and Privacy Act (FERPA), the Children’s Online Privacy Protection Act (COPPA), the Individuals with Disabilities Education Act (IDEA), the National School Lunch Act (NSLA), and the Protection of Pupil Rights Amendment (PPRA).

Another concern about using AI in education is bias. First, it is important to define what is meant by bias. According to Princeton University, “Bias is a broad category of behaviors including discrimination, harassment, and other actions which demean or intimidate individuals or groups because of personal characteristics or beliefs or their expression.” One type of bias is gender bias. Akgun and Greenhow (2022) describe how Google Translate has exhibited gender bias when translating language about careers. When it translated the Turkish equivalent of “She/he is a nurse” into the feminine form, it also translated the Turkish equivalent of “She/he is a doctor” into the masculine form. The authors argue that this creates an “urgent need to introduce students and teachers to the ethical challenges surrounding AI applications in K-12 education and how to navigate them.” Research on AI bias is ongoing, and resources and programs are available to help teachers educate their students about the biases AI presents.

This research has deepened my understanding of the concerns surrounding the use of AI in education. AI is exciting, and for teachers looking for relief from the many tasks pressed upon them, it can seem like a “too good to be true” assistant. It is imperative that we keep AI’s true abilities in view and not be swayed by the exaggerated claims of companies that are quick to sell or market it to schools. It is equally pressing to be vigilant in protecting student data, especially that of the minors in our schools. Current policies and laws help provide this protection. Teachers must also be given the time and tools to teach themselves and their students about the biases AI may introduce into the information students use. These include many kinds of bias, not only the gender and racial biases identified by Akgun and Greenhow (2022). This matters when discussing AI-generated feedback: the feedback students receive needs to be accurate and free of bias, and it must be generated by a program designed to protect student data from breaches.

The Continued Threat to Personal Data: Key Factors Behind the 2023 Increase. https://www.apple.com/newsroom/pdfs/The-Continued-Threat-to-Personal-Data-Key-Factors-Behind-the-2023-Increase.pdf

Akgun, S., & Greenhow, C. (2022). Artificial intelligence in education: Addressing ethical challenges in K-12 settings. AI and Ethics, 2(3), 431-440. https://doi.org/10.1007/s43681-021-00096-7

Haidar, A. (2024). ChatGPT and Generative AI in Educational Ecosystems: Transforming Student Engagement and Ensuring Digital Safety. In Preparing Students for the Future Educational Paradigm (pp. 70-100). IGI Global.

Johnson, M. (2020). A scalable approach to reducing gender bias in Google Translate. Google AI Blog. https://ai.googleblog.com/2020/04/a-scalable-approach-to-reducing-gender.html

Princeton University. (n.d.). Bias, discrimination, and harassment. https://inclusive.princeton.edu/addressing-concerns/bias-discrimination-harassment

Selwyn, N. (2022). The future of AI and education: Some cautionary notes. European Journal of Education, 57(4), 620-631.

Illinois General Assembly. (n.d.). Student Online Personal Protection Act (105 ILCS 85/). https://www.ilga.gov/legislation/ilcs/ilcs3.asp?ActID=3806&ChapterID=17