Franchesca Jones’s Updates

Update 2: Educational Theory

As an English as a Second Language instructor for 13 years and an LDL program student, I have grown to appreciate the significant value and power of formative assessment. I dedicated my research to transforming my English classes into e-portfolio building journeys in which learners continuously produced artifacts in English and experienced the immeasurable benefits of personalized and collaborative learning paired with recursive feedback. Inspired by images like the one below, I came to hold a grudge against summative assessments that aim to rank learners rather than offer "meaningful feedback" (Cope & Kalantzis, 2019).

Recently, I was given a new role as a national testing specialist with a government entity responsible for continuously developing the English language proficiency assessment that is used across the country to measure progress and determine eligibility for college entry. With the opportunity to contribute to the improvement of a national summative assessment, I needed to reexamine summative assessment and understand its value outside the classroom, on a national level. It is evident that the test is still the "primary measure of educational outcomes, learner knowledge and progress, and teacher, school and system effectiveness," and that it also shapes the development of curriculum (Cope & Kalantzis, 2019). Since it seems it will take time for educational systems to embrace the idea of ending traditional summative assessments, how can we begin to take steps towards changing how they are implemented? What role can artificial intelligence play in updating summative assessments so that they better align with the principles of Education 2.0? Specifically, what role can artificial intelligence play in improving how we assess language proficiency?

In exploring how AI can transform summative assessments, my aim is to bridge the gap between the formative assessment practices that nurture deep, personalized learning and the summative assessments that remain a cornerstone of educational policy and accountability. By leveraging AI, we can reimagine summative assessments as tools that not only measure but also enhance learning outcomes, paving the way for a more inclusive and effective educational system.

Understanding Artificial Intelligence

Given the limitations of traditional summative assessments, such as their potential to oversimplify student learning into a single score and their reliance on methods that may not fully capture diverse learner abilities, AI offers a promising avenue for innovation. By integrating AI, we can enhance the precision, adaptability, and fairness of summative assessments, ensuring they better align with the principles of Education 2.0 and provide more meaningful insights into learner proficiency.

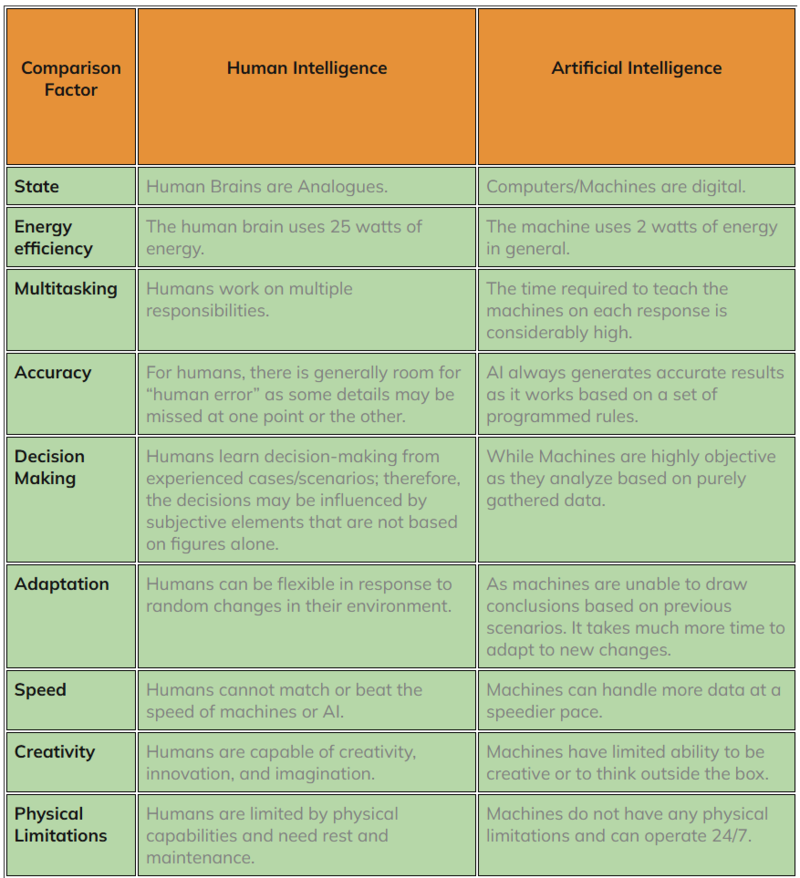

Several articles define artificial intelligence (AI) in ways that suggest it could replace human intelligence, describing it as "the simulation of human intelligence in machines" (Charpentier-Jiménez, 2024), "the capability for intelligent behavior once considered unique to humans" (Russell & Norvig, 2020), and "intelligent systems that can automate tasks traditionally carried out by humans" (Chaudhry & Kazim, 2021). Another view is that artificial intelligence is a "cognitive prosthesis" (Cope & Kalantzis, 2019) that has "the capacity to realize complex goals" (Korteling et al., 2021). Treating AI as a tool that improves how humans do things, rather than as a replacement for humans doing things, is an important distinction, grounded in the key differences noted in the table below:

As shown in the table, artificial intelligence can handle more data at a faster pace than humans with the ability to produce accurate results based on programmed rules and without facing the physical limitations that humans do. On the other hand, humans make decisions that may be influenced by subjective elements, not figures alone. Humans are also capable of creativity, innovation and imagination that machines lack. With clarity on how AI can enhance human intelligence, how can these attributes of AI improve assessment?

Improving Assessment Leveraging Artificial Intelligence

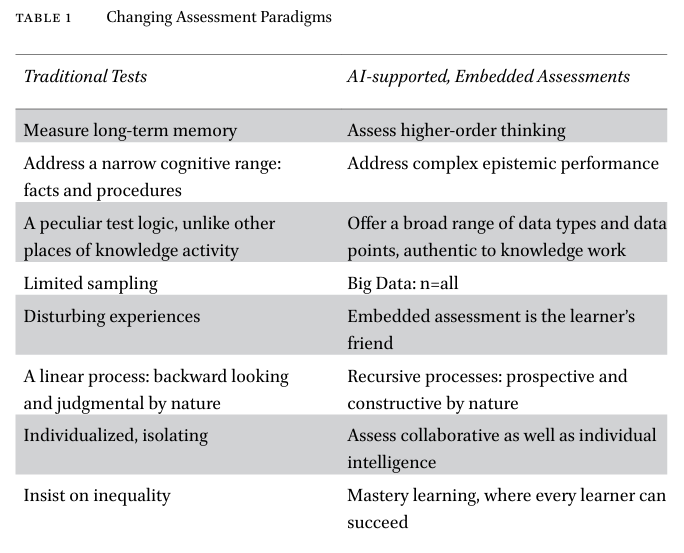

Artificial intelligence (AI) has the potential to transform educational assessments by addressing the limitations and problems characteristic of traditional methods, highlighted in the table below:

Traditional assessments often focus narrowly on evaluating long-term memory and specific cognitive skills, such as recalling facts or applying predetermined procedures. These assessments are typically linear, summative, and individualized, creating a stressful, judgmental environment that emphasizes unequal outcomes and provides only a limited snapshot of a student's capabilities (Mackenzie, 2022; Ryan & Shepard, 2008). This approach fails to capture the full range of a learner's skills and knowledge, often leading to an incomplete understanding of student progress (Pellegrino et al., 2021).

In contrast, AI-supported assessments offer a more comprehensive and dynamic approach. By shifting the focus to higher-order thinking and complex epistemic performance, AI enables continuous, embedded evaluation that supports mastery learning and collaboration (Baker & Smith, 2019; Siemens & Baker, 2013). These systems can process vast amounts of data across multiple points and types, allowing for the delivery of ongoing, constructive feedback, which is essential for fostering an inclusive and equitable learning environment (Luckin, Holmes, Griffiths, & Forcier, 2022; Behrens & DiCerbo, 2013). AI-driven assessments are designed to encourage recursive learning processes, where students continuously improve through formative feedback rather than being judged solely on their performance at a single point in time (Cope & Kalantzis, 2019).

Moreover, AI can enhance the fairness and richness of assessments by evaluating students over extended periods and from an evidence-based, value-added perspective. Tools developed by researchers, such as those at University College London (UCL), incorporate AI to assess students continuously, providing a more nuanced understanding of their learning trajectories. For example, AI-powered assessment tools like computer adaptive and diagnostic selected-response tests recalibrate in real time to align with a learner's current knowledge level. These tools offer specific, actionable feedback on areas of strength and weakness, allowing for a more personalized and effective learning experience (Luckin; Samarakou et al.; Chang, 2015). This approach not only reduces the workload for educators by automating grading but also improves the quality and precision of feedback, using techniques such as semantic analysis, voice recognition, and natural language processing.
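
To make the idea of a test that recalibrates to the learner's current level more concrete, here is a minimal sketch of one way a computer adaptive test could work. It assumes a one-parameter (Rasch) item response model, which is a common choice but is not confirmed as the method behind any of the tools cited above. The function names, the step size, and the five-item bank are all hypothetical and chosen only for illustration.

```python
import math


def p_correct(theta, b):
    """Rasch (1PL) probability that a learner with ability `theta`
    answers an item of difficulty `b` correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def pick_next_item(theta, difficulties, used):
    """Pick the unused item whose difficulty is closest to the current
    ability estimate (under the Rasch model this is the most informative item)."""
    candidates = [i for i in range(len(difficulties)) if i not in used]
    return min(candidates, key=lambda i: abs(difficulties[i] - theta))


def update_ability(theta, b, correct, step=0.5):
    """One gradient step on the Rasch log-likelihood: the estimate moves up
    after a correct answer and down after an incorrect one.
    The step size of 0.5 is an arbitrary illustrative value."""
    observed = 1.0 if correct else 0.0
    return theta + step * (observed - p_correct(theta, b))


def run_adaptive_test(difficulties, answer_fn, n_items=5, theta=0.0):
    """Administer up to `n_items` items, re-estimating ability after each answer.
    `answer_fn(difficulty)` is a stand-in for the learner and returns True/False."""
    used = set()
    history = []  # (item difficulty, was it correct, ability estimate afterwards)
    for _ in range(min(n_items, len(difficulties))):
        i = pick_next_item(theta, difficulties, used)
        used.add(i)
        correct = answer_fn(difficulties[i])
        theta = update_ability(theta, difficulties[i], correct)
        history.append((difficulties[i], correct, theta))
    return theta, history


if __name__ == "__main__":
    # Hypothetical item bank, difficulties on a logit scale (easy to hard).
    bank = [-2.0, -1.0, 0.0, 1.0, 2.0]
    # Hypothetical learner who handles every item up to difficulty 1.0.
    estimate, log = run_adaptive_test(bank, lambda b: b <= 1.0)
    for difficulty, correct, theta in log:
        print(f"item difficulty {difficulty:+.1f}  correct={correct}  estimate={theta:+.2f}")
```

The sketch starts every learner at the same estimate, offers the item closest to that estimate, and nudges the estimate after each response, so stronger and weaker learners quickly see different items. An operational test would also need calibrated item difficulties, a stopping rule, and content balancing, none of which are modeled here.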

I'm still struggling to apply these benefits to summative assessment, and to summative language assessment in particular. I plan to move forward by focusing on the areas that are most readily applicable, such as data transparency.

References:

Baker, R. S., & Smith, L. (2019). Educational Data Mining and Learning Analytics: Proceedings of the International Conference. London: Springer.

Behrens, J. T., & DiCerbo, K. E. (2013). Technological implications for assessment ecosystems. In E. W. Gordon (Ed.), The Gordon Commission on the Future of Assessment in Education: Technical Report (pp. 101–122). Princeton, NJ: The Gordon Commission.

Charpentier-Jiménez, W. (2024). Assessing artificial intelligence and professors’ calibration in English as a foreign language writing courses at a Costa Rican public university. Actualidades Investigativas en Educación, 24(1), 533-557. https://doi.org/10.15517/aie.v24i1.55612

Cope, B., & Kalantzis, M. (2019). Education 2.0: Artificial Intelligence and the End of the Test. Technology, Knowledge and Learning, 24(3), 419-434. https://doi.org/10.1007/s10758-018-9372-5

González-Calatayud, V., Prendes-Espinosa, P., & Roig-Vila, R. (2021). Artificial intelligence for student assessment: A systematic review. Applied Sciences, 11(12), 5467. https://doi.org/10.3390/app11125467

Korteling, J. E., van de Boer-Visschedijk, G. C., Blankendaal, R. A. M., Boonekamp, R. C., & Eikelboom, A. R. (2021). Human versus artificial intelligence. Frontiers in Artificial Intelligence, 4. https://doi.org/10.3389/frai.2021.XXXXXX

Luckin, R., Holmes, W., Griffiths, M., & Forcier, L. B. (2022). Artificial Intelligence and the Future of Testing in Education. London: Pearson.

Mackenzie, R. (2022). Assessment 4.0: Reimagining Education in the Age of AI. New York, NY: Routledge.

Naukri.com. (n.d.). [Image showing differences between artificial intelligence and human intelligence]. In Differences between artificial intelligence and human intelligence. Naukri.com. https://www.naukri.com/code360/library/differences-between-artificial-intelligence-and-human-intelligence

Pellegrino, J. W., Chudowsky, N., & Glaser, R. (Eds.). (2021). Knowing What Students Know: The Science and Design of Educational Assessment (2nd ed.). Washington, DC: National Academies Press.

Pett, J. (n.d.). For a fair selection, everybody has to take the same exam: Please climb that tree [Editorial cartoon]. Retrieved from https://annakolm.pl/276/fair-selection/

Russell, S. J., & Norvig, P. (2020). Artificial intelligence: A modern approach (4th ed.). Pearson.

Ryan, K. E., & Shepard, L. A. (Eds.). (2008). The Future of Test-Based Accountability. New York, NY: Routledge.

Siemens, G., & Baker, R. S. J. D. (2013). Learning analytics and educational data mining: Towards communication and collaboration. American Behavioral Scientist, 57(10), 1380–1400.