Meaning Patterns’s Updates

Unicode, Emojis, and the New Meaning of "Text" in the Digital Era

Text. Meanings made by combining graphemes, the elemental, character-level components of writing, as documented in Unicode. Graphemes can be phonemic, referencing sounds, or ideographic, referencing an idea or material thing.

The textual form§AS1.4.7 is represented and communicated in written script. Many definitions of text are broader than this. Images, for instance, are by some conceived as text, or an integral part of text. Our conversations might be conceived as texts. If we went broader still, metaphorically speaking, life is a text. But by this time we are eliding just too many things for the word “text” to be particularly helpful.

We want to narrow our definition because there are some important distinctions that we would like to make between the various meaning forms, and particularly between writing and speech. Writing is very different from speech, in fact as different from speech as it is from image. Of course there are parallels, and that is what this grammar of multimodality is about – the major functional parallels that we call reference, agency, structure, context, and interest. But the parallels between speech and writing are no stronger than any of the others.

For the digital age, we can rely on a very precise definition of textual form: those meanings that can be encoded using the elemental components of meaning-rendering documented in Unicode.§0.2.1a

The elemental component of text is a grapheme, the minimally meaningful contrastive unit in text. Graphemes can refer either to a sound (a phoneme, such as the letter “a”), or to an idea or a material thing (an ideograph, such as the number “7,” a Chinese character, or an emoji).

* * *

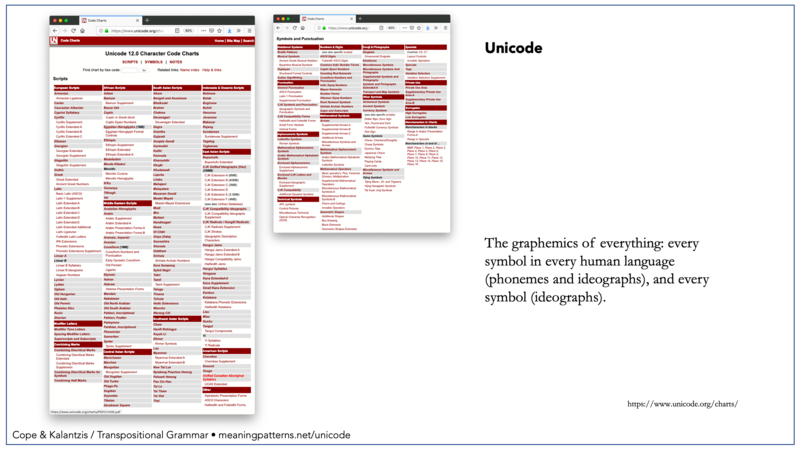

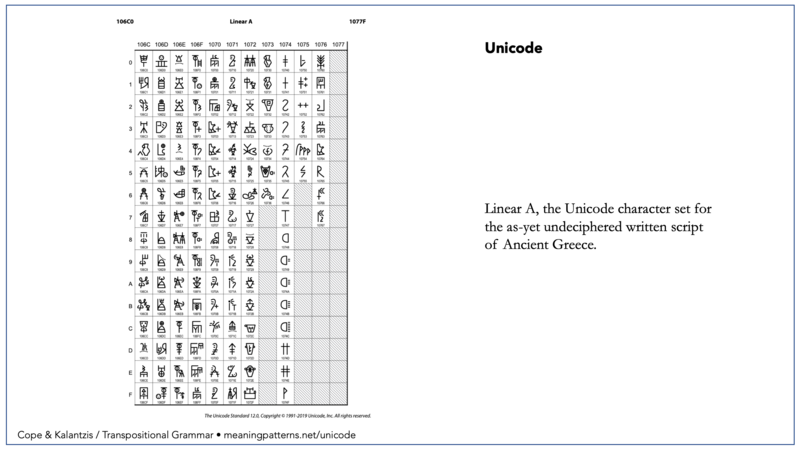

Unicode is a comprehensive character set documenting all systems of written text.31 Of the 136,755 characters in Unicode version 10, a few are: a, A, 7, ?, @, 威, ي and ☺. For writing that is principally alphabetic, graphemes mostly represent sounds. In other scripts characters mainly represent ideas, such as in Chinese where each character is an ideograph – although the distinction is not so clear, because for writers in mainly alphabetic languages 7, @ and ☺ are ideographs, and utterable sounds can be represented in Chinese. Unicode includes symbolic scripts that range from Japanese dentistry symbols to the recycle symbol, and 2,623 emojis.§AS1.1.1a It also includes obscure historical scripts, like Linear A, an as-yet undeciphered written script of Ancient Greece.
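As a rough illustration of what it means for these characters to be "documented in Unicode," the sketch below looks up the code point, official name, and general category of the eight sample characters listed above. It uses Python's standard `unicodedata` module; the helper name `describe` and the list `SAMPLES` are made up for this example, and the exact names returned depend on the Unicode version bundled with the Python interpreter.

```python
import unicodedata


def describe(ch: str) -> tuple:
    """Return (code point, Unicode name, general category) for one character."""
    code_point = f"U+{ord(ch):04X}"
    # Fall back to a placeholder if this Python's Unicode tables lack a name.
    name = unicodedata.name(ch, "<unnamed>")
    category = unicodedata.category(ch)
    return code_point, name, category


# The sample characters mentioned in the paragraph above.
SAMPLES = ["a", "A", "7", "?", "@", "威", "ي", "☺"]

if __name__ == "__main__":
    for ch in SAMPLES:
        code_point, name, category = describe(ch)
        # Only ASCII metadata is printed, so this works on any terminal.
        print(f"{code_point:<8} {category}  {name}")
```

The general category gives a crude hint of the phonemic/ideographic distinction: letters fall under categories beginning with `L`, digits under `Nd`, and pictorial symbols such as the smiling face under `So` ("Symbol, other"). Note that Unicode itself classes Chinese characters as letters (`Lo`), so the category is not a reliable test of whether a grapheme is an ideograph.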

“Unicode” was a name coined in 1988 by Joseph Becker, a researcher at the Xerox lab in Palo Alto, California.32 In 1984, he had written a seminal article for Scientific American on multilingual word processing,33 and in 1987–88 he was working with a colleague at Xerox, Lee Collins, and Mark Davis at Apple. A Unicode Working Group formed in 1989, with Microsoft, Sun, IBM, and other companies soon joining, leading to the creation of today’s Unicode Consortium in 1991. At its first release in October 1991, Unicode consisted of 7,161 characters. The June 2017 release added 8,518 new characters, bringing the total to 136,755.34

In a manner that has become typical of the global governance of digital meanings, companies have eschewed competition to form non-profits that they control so singular and universal agreement can be reached about the means of production, distribution, and exchange of meanings. Representatives from Facebook, Google, Amazon, and Adobe are now on the Unicode Consortium’s board of directors.35

The details of the Unicode standards have been thrashed out in volunteer subcommittees and conferences since 1991, but the basis of their work is to catalogue thousands of years of human writing practices, as well as new practices such as the use of emojis.§AS1.1.1a The participants argue the merits of new candidates for the compendium in obsessively careful detail. The end result is that, for all our apparent uncertainties about meaning in the modern world, there is just one definitive list of the elementary components of all writing, ever – and this is it.

Today, everybody uses Unicode on their phones, web browsers, and word processors. Only the aficionados argue, but even then, the arguments are limited to details and are readily resolvable by consensus. Such are the manners that the players are sometimes able to keep in the backrooms of digital governance.

Most Unicode characters are ideographs; just a few are phonemes, even if the relatively few phonemes in the character set still get a lot of use. By the time we reach dentistry symbols and emojis in Unicode, we encounter ways of meaning that are getting close to image. There has been a tendency in the digital age to move our character set towards ideographs – not only numbers, but symbols and emojis of internationalization in our contemporary multilingual spaces, the navigational icons in user interfaces, and visual design that marks the larger textual architectures of the page and the screen. “We are all becoming Chinese,”36 says Jack Goody,§AS1.4.7a anthropologist of writing, flowers, and love, if only half in jest.

One of the underlying reasons for this convergence with image is that the digitized images of characters are manufactured in the same way as all other images, by the composition of pixels. These are invisible to the casual viewer, only to become visible as conceptual wholes – as a character in writing or a picture. The fact that writing and images are today made of the same stuff brings them closer together for the most pragmatic of reasons in the media of their manufacture and distribution. They can easily sit beside each other on the same screen or digitally printed surface, or they can layer over each other.

These are some of the mundanely practical reasons why, in the era of digital meaning, we put text beside image in our listing of meaning forms, and not speech as might be conventionally expected. And there are other reasons, the most profound of which is that we see writing but hear speech. Writing is close to image, whereas speech is close to sound. This has always been the case. But today the conjunctions are closer than ever, providing a practical rationale for why today we need to disaggregate “language” in a grammar of multimodality.

* * *

Likeness. The materialization of a meaning in its context by visual, sounded, tangible, gestural similitude – never the same, because the time, place, and available resources for materialization are inevitably different in some respect, though similar enough to spark recognition.

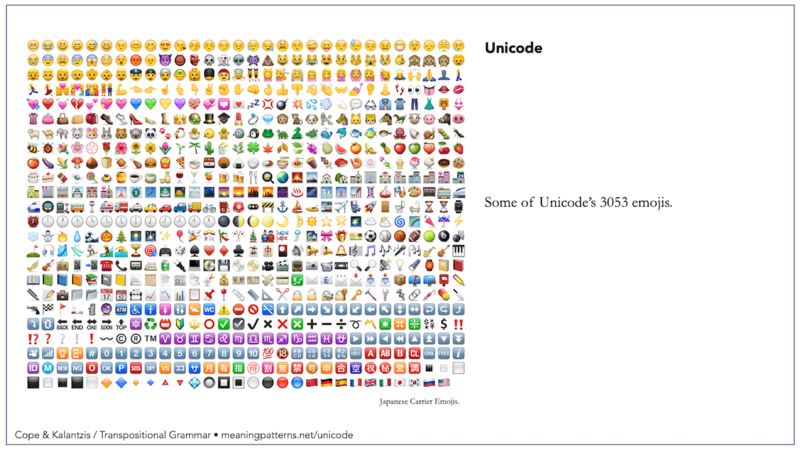

Crossing the gamut of multimodality, likeness includes photographs, imitative gestures, onomatopoeia, and objects and spaces whose functions are self-evident. With “that which can be represented in Unicode” as our definition of text, Unicode has many iconic ideographs that are likenesses, now including 3,053 emojis.§1.1.1a

* * *

A smiling face and a heart were among the first emojis to be included in the Unicode character set,§MS0.2.1a added to version 1.1 for its release in 1993.35 Subsequent releases of Unicode have added more, bringing the total to 3,053 in version 12.0.36 The word “emoji” is Japanese for pictograph, and an “emoticon” is a kind of emoji that expresses feeling, a contraction of the words emotion + icon.37 The modern origins of pictographic imagery can be traced to Otto Neurath and Marie Reidemeister’s groundbreaking work in the 1920s at the Vienna Museum of Society and Economy.§MS1.1.3h

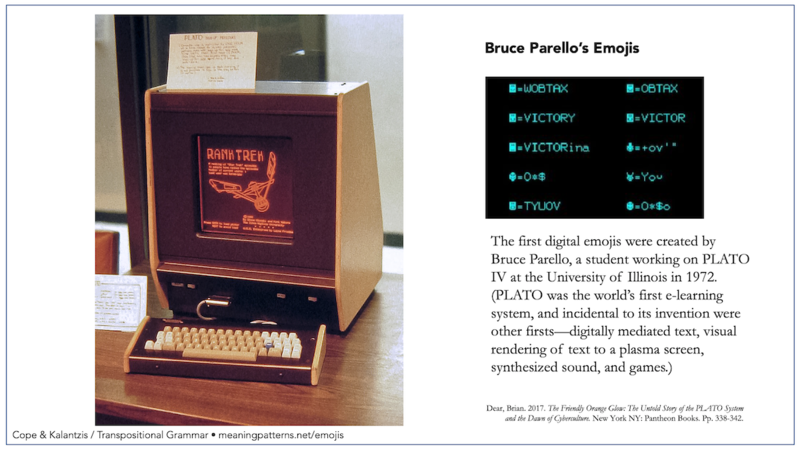

The first digital emojis were created by Bruce Parello, a student working on PLATO IV at the University of Illinois in 1972.38 (PLATO was the world’s first e-learning system, and incidental to its invention were other firsts – digitally mediated text, visual rendering of text to a plasma screen, synthesized sound, and games.39)

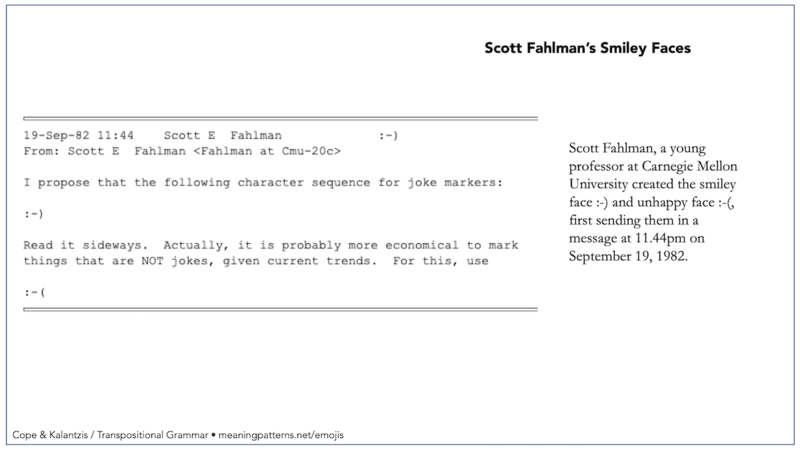

Scott Fahlman, a young professor at Carnegie Mellon University, created the smiley face :-) and unhappy face :-(, first sending them in a message at 11.44pm on September 19, 1982.40

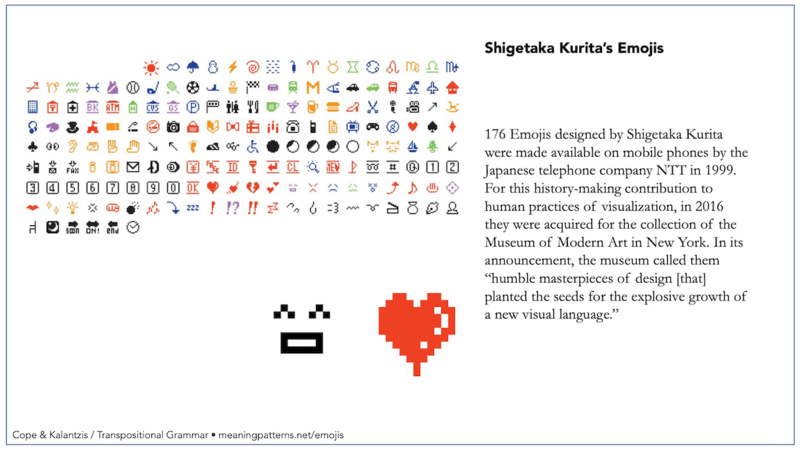

One hundred and seventy-six emojis designed by Shigetaka Kurita were made available on mobile phones by the Japanese telephone company NTT in 1999. For this history-making contribution to human practices of visualization, in 2016 they were acquired for the collection of the Museum of Modern Art in New York. In its announcement, the museum called them “humble masterpieces of design [that] planted the seeds for the explosive growth of a new visual language.”41 Emojis were added to Microsoft Windows in 2008, the Apple OS in 2011, and Google Android in 2013. Facebook launched its “Like” emoji on 9 February 2009,42 and added other so-called “reactions” in 2016.43

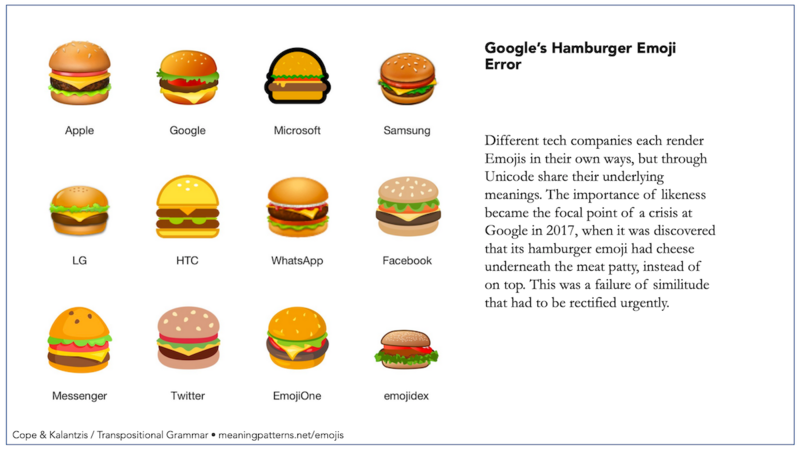

Different tech companies each render emojis in their own ways, but through Unicode share their underlying meanings. The importance of likeness in so many emojis became the focal point of a crisis at Google in 2017, when it was discovered that its hamburger emoji had cheese underneath the meat patty, instead of on top. This was a failure of similitude that had to be rectified urgently.44
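The split between shared meaning and vendor-specific rendering can be seen in a minimal sketch, again using Python's standard `unicodedata` module. What Unicode fixes for the hamburger is a code point and a name; the bytes below are what travels between devices, while the picture itself, cheese placement included, is supplied by whichever font the receiving device uses. The function name `wire_forms` is an invention for this example.

```python
import unicodedata

# The hamburger emoji, written as an escape so the source stays ASCII-safe.
HAMBURGER = "\U0001F354"


def wire_forms(ch: str) -> dict:
    """Show what is standardized for a character: identity and encodings, not its glyph."""
    return {
        "code_point": f"U+{ord(ch):04X}",
        "name": unicodedata.name(ch),
        # The same code point serialized in two common encodings.
        "utf8": ch.encode("utf-8").hex(" "),
        "utf16": ch.encode("utf-16-be").hex(" "),
    }


if __name__ == "__main__":
    for key, value in wire_forms(HAMBURGER).items():
        print(f"{key:<10} {value}")
```

Nothing in this output says where the cheese goes. That detail lives only in each company's emoji font, which is why the same message could show differently assembled burgers on different phones.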

Text today – defined by this grammar as that which is represented in Unicode – includes materializations of meaning that cross icon (such as emojis and dentistry symbols), directedness (such as arrows, warning signs), and abstractions (including the letters of phonic alphabets that only conventionally and arbitrarily mean the sounds they reference). Some emojis are directed signs or abstract symbols, but most are likenesses. Today, people use likeness emojis more and more. As we begin to type a word on a phone, suggestive text prompts us to use an emoji instead.

Moving beyond the constraints of abstract and arbitrary phonemes and graphemes, in the era of total globalization, emojis of likeness have burgeoned to become a universal, translingual semantics. Here is another place where text is diverging still further from speech, using more likenesses and fewer abstractions. Though of course, even if more universal for their divergence from speech, they are not without cross-cultural weirdnesses45 and divergent interpretations.46

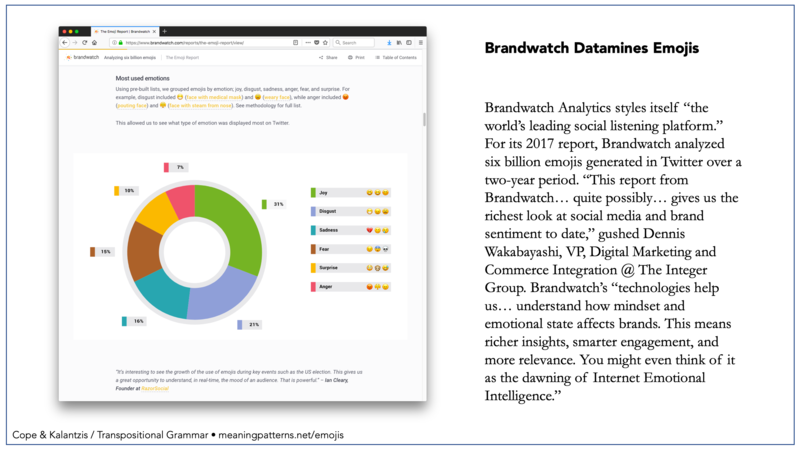

Emojis expressed in Unicode have become useful to marketeers wanting to know how people feel in the global marketplace. The universal semantics standardized in Unicode helps them to do this – in aggregate, not to mention the finely grained knowing of us all individually.

Brandwatch Analytics styles itself “the world’s leading social listening platform.” For its 2017 report, Brandwatch analyzed six billion emojis generated in Twitter over a two-year period. “This report from Brandwatch … quite possibly … gives us the richest look at social media and brand sentiment to date,” gushed Dennis Wakabayashi, VP, Digital Marketing and Commerce Integration @The Integer Group. Brandwatch’s “technologies help us … understand how mindset and emotional state affects brands. This means richer insights, smarter engagement, and more relevance. You might even think of it as the dawning of Internet Emotional Intelligence.”47
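To see why a shared character set makes this kind of aggregate counting straightforward, consider the toy sketch below. It is not Brandwatch's method, which the report quoted here does not describe; it simply tallies pictorial symbols across a few invented messages. As a loudly stated assumption, it treats any character in Unicode's general category `So` ("Symbol, other") as an emoji. That is only an approximation: the real emoji set is defined in Unicode's separate emoji data files, which Python's standard library does not expose, so some emojis are missed and some non-emoji symbols are counted.

```python
from collections import Counter
import unicodedata


def count_symbols(messages):
    """Tally characters in category 'So', a rough stand-in for emojis."""
    counts = Counter()
    for message in messages:
        for ch in message:
            if unicodedata.category(ch) == "So":
                counts[ch] += 1
    return counts


# Invented sample messages; escapes keep the source ASCII-safe.
MESSAGES = [
    "Great burger \U0001F354\U0001F354",  # two hamburger emojis
    "Love it \u2764",                     # heavy black heart
    "So sad \u2639",                      # white frowning face
]

if __name__ == "__main__":
    for ch, n in count_symbols(MESSAGES).most_common():
        print(f"U+{ord(ch):04X} {unicodedata.name(ch)}: {n}")
```

Because every platform transmits the same code point for the same emoji, the tally works regardless of which phone or app produced each message.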

- Cope, Bill and Mary Kalantzis, 2020, Making Sense: Reference, Agency and Structure in a Grammar of Multimodal Meaning, Cambridge UK: Cambridge University Press, pp. 23-25.

- Kalantzis, Mary and Bill Cope, 2020, Adding Sense: Context and Interest in a Grammar of Multimodal Meaning, Cambridge UK: Cambridge University Press, pp. 32-35. [§ markers are cross-references to other sections in this book and the companion volume (MS/AS); footnotes are in this book.]

Wow! This is so absolutely fascinating! It becomes so apparent when I try to transcribe my case study interviews... When I talk to my study participants in our Zoom conversations, it all makes perfectly good sense; our interaction is rational and logical to both of us engaged in the conversation. But once I try to transcribe the conversation and put it in writing, the end result is very often barely readable, and will likely be hard to follow for someone who encounters the written text for the first time.

I think we often tend to think of writing as a representation of the spoken language, and yet when we are faced with the task of preparing for a presentation or webinar (as I am doing right now at work), we realize that if we try to deliver the information as it was written on our notes, it will sound stilted and awkward.

No! Speaking and writing are definitely two very different ways of conveying meaning. Unicode, emojis, emoticons, and new technological advances now enable us to adapt and expand text to include a growing number of pictographs, and allow text and images to be displayed side by side - so when you describe the hills and scenery of Greece's landscape, or the white chairs so ubiquitous in Greek cafes, you can simply display a photograph right next to the descriptive paragraph.

And yet, in many schools, and especially in academic writing, we continue to undervalue multimodality and insist on the supremacy of the written text - to the exclusion of other forms. So perhaps we really need to expand our definition of text to include images, video, and sound - now all of them playing side by side on our computer screens!